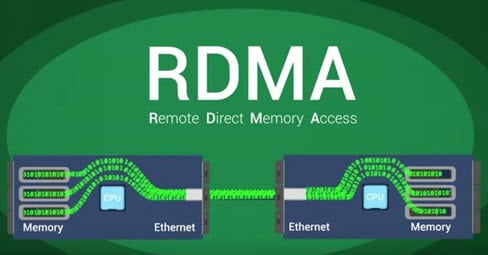

As organizations have to deal with a staggering amount of data, speed and efficiency have become necessary in data processing. The need for faster data transfer and reduced latency is critical as the demand for higher performance in networking and storage increases. One technology that is changing the storage industry landscape is Remote Direct Memory Access (RDMA).

RDMA lets one computer access the memory of another directly, without involving either machine's operating system in the data path and without making unnecessary intermediate copies. Eliminating kernel involvement translates to quicker data transfers and lower latency, making RDMA a boon in modern HPC environments. This article will examine the technologies that enable RDMA, how they function, and why they are changing the face of networking and storage.

What Is Remote Direct Memory Access?

Remote Direct Memory Access (RDMA) is a technology that moves data between computers at high speed by allowing one computer to read from or write to the memory of another with little or no involvement from either system's CPU during the transfer.

In a classic data transfer system, the data is copied several times as it passes through the layers of the operating system, and the CPU is involved at each step. This adds latency to the transfer and consumes CPU resources that could be used elsewhere. RDMA avoids these inefficiencies by letting data flow directly between the memory of one system and the other with minimal CPU involvement.

An RDMA network is popular for high-performance computing (HPC) environments, data centers, and cloud computing, where efficient, low-latency data transfers are paramount. This makes RDMA a perfect fit for applications such as databases, financial services, or anything machine learning-based, where fast networking is critical.

How RDMA Works

Before explaining RDMA, let us consider how data transfer happens without it. Generally, data passes through multiple layers inside the operating system before reaching the network, and along the way it is copied several times and processed by the CPU. This process consists of the following steps:

- Copying data to an intermediate buffer: The CPU first copies the data from the application's memory into a buffer managed by the operating system, which costs performance.

- Transferring data through the network stack: The data has to go through the network protocol stack (TCP/IP), which also adds delay.

- Sending the data over the network: The data is then transferred over the network, adding further latency.

With RDMA, by contrast, the operating system's network stack is bypassed and the CPU is largely left out of the data path; data moves directly between the memories of the two systems. Applications can exchange data without the CPU handling every transfer. This works because RDMA-capable Network Interface Cards (NICs) take over work the CPU would otherwise do and process the communication in hardware, which frees up CPU cycles and cuts latency.
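The contrast between the two paths can be sketched as a toy model in Python. This is an in-process illustration only, not real RDMA: the function names, the `Transfer` record, and the way copies are counted are assumptions made for this example, and the final buffer assignment merely stands in for the transfer that an RDMA-capable NIC would perform in hardware.

```python
from dataclasses import dataclass


@dataclass
class Transfer:
    received: bytes   # what the receiving application ends up with
    cpu_copies: int   # copies attributed to the host CPUs in this toy model


def conventional_transfer(app_data: bytes) -> Transfer:
    """Toy model of the copy-based path."""
    cpu_copies = 0
    # Sender: the CPU copies application data into an OS-managed buffer.
    sender_os_buffer = bytearray(app_data)
    cpu_copies += 1
    # The network stack and the wire are modelled as a plain hand-off
    # and are not counted as a CPU copy here.
    on_the_wire = bytes(sender_os_buffer)
    # Receiver: the CPU copies from its OS buffer into application memory.
    receiver_app_buffer = bytearray(on_the_wire)
    cpu_copies += 1
    return Transfer(bytes(receiver_app_buffer), cpu_copies)


def rdma_style_transfer(app_data: bytes, remote_app_buffer: bytearray) -> Transfer:
    """Toy model of the direct path: data lands in the remote application buffer."""
    if len(app_data) > len(remote_app_buffer):
        raise ValueError("remote buffer is too small for the payload")
    # Stand-in for the NIC placing data directly into remote memory:
    # no intermediate OS buffers, so no host CPU copies are counted.
    remote_app_buffer[: len(app_data)] = app_data
    return Transfer(bytes(remote_app_buffer[: len(app_data)]), cpu_copies=0)


payload = b"block-0042"
old = conventional_transfer(payload)
new = rdma_style_transfer(payload, bytearray(64))
print(old.cpu_copies, new.cpu_copies)
```

In this model the conventional path reports two CPU copies and the direct path reports none, while both deliver the same bytes. Real systems differ in how many copies each stage makes, so the counts are illustrative rather than measured.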

RDMA supports multiple protocols that enable memory access, such as:

- InfiniBand: This standard is common in high-performance environments for RDMA implementations, as it boasts high throughput and low latency.

- RDMA over Converged Ethernet (RoCE): RoCE enables RDMA to operate over standard Ethernet networks, opening it up to data centers that do not wish to invest in a separate InfiniBand fabric. RDMA-capable NICs are still required.

- Internet Wide Area RDMA Protocol (iWARP): iWARP allows RDMA to be used across conventional TCP/IP networks, which may be beneficial within environments where the Ethernet infrastructure is already present.
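As a compact summary of the list above, the sketch below records which underlying network each option runs on. The `RdmaTransport` type and its field names are made up for illustration. The distinction between RoCE v1 (Ethernet link layer only) and RoCE v2 (carried in UDP/IP, so it can cross IP routers) is not discussed in this article and is added here as background.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RdmaTransport:
    name: str
    carried_over: str
    ip_routable: bool  # can the traffic cross ordinary IP routers?


TRANSPORTS = (
    RdmaTransport("InfiniBand", "dedicated InfiniBand fabric", False),
    RdmaTransport("RoCE v1", "Ethernet link layer", False),
    RdmaTransport("RoCE v2", "UDP/IP over Ethernet", True),
    RdmaTransport("iWARP", "TCP/IP", True),
)


def ip_routable_options() -> list[str]:
    """Names of the transports that can traverse a routed IP network."""
    return [t.name for t in TRANSPORTS if t.ip_routable]


print(ip_routable_options())
```

A table like this can help when deciding which option fits an existing network: a site with a routed Ethernet and IP infrastructure would look at the last two entries first.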

Key Benefits of RDMA

RDMA has several strengths that make it a promising candidate for networking and storage solutions:

Reduced Latency

One of RDMA's main advantages is reduced latency. Because data moves directly between memories, bypassing the CPU and the operating system's network stack, the delays those layers add are avoided. This low-latency capability is valuable in settings where response time is critical, such as financial trading platforms, where even fractions of a millisecond can turn into profits or losses.

Increased Data Throughput

RDMA can use close to the full network bandwidth for data transfer, because the CPU and the software stack are no longer the bottleneck, and it can be substantially faster than conventional methods. Applications that deal with large data sets, such as machine learning and big data analytics, need high data throughput because they must be fed input continuously for processing.
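To put throughput in concrete terms, the best-case time to move a payload is its size in bits divided by the link rate. The helper below does that arithmetic. The 100 Gb/s link and the 1 GiB payload are illustrative values chosen for this example, not measurements, and real transfers add protocol overhead on top.

```python
def ideal_transfer_seconds(size_bytes: int, link_gbps: float) -> float:
    """Lower bound on transfer time: payload bits divided by link rate."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9)


one_gib = 1024 ** 3
seconds = ideal_transfer_seconds(one_gib, link_gbps=100.0)
print(f"{seconds * 1000:.1f} ms")  # about 85.9 ms
```

The closer a transfer method gets to this bound, the better it is using the link. Anything that stalls the sender, such as extra copies or per-packet CPU work, pushes the real figure above it.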

Reduced CPU Load

RDMA allows data transfer work to be offloaded from the CPU to the network interface card (NIC), which leaves more CPU resources for other important tasks. In traditional networking models the CPU participates in every phase of a data transfer. RDMA removes this requirement, so an application running on top of it can concentrate on its own processing logic instead of moving data around.

Efficient Memory Utilization

RDMA performs zero-copy data movement directly between memory locations, reducing the memory footprint and improving system efficiency overall. This can considerably enhance performance by lowering the memory resource pressure for memory-intensive applications.
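Python's built-in `memoryview` offers a loose in-process analogy for zero-copy access. This is not RDMA, which moves data between machines through the NIC, but it shows the difference between duplicating bytes and referring to them in place. The buffer contents and variable names are arbitrary.

```python
buffer = bytearray(b"header--payload-bytes")

copied = bytes(buffer[8:])       # slicing allocates new storage for the payload
view = memoryview(buffer)[8:]    # a view refers to the same storage, no copy

buffer[8] = ord("P")             # modify the original buffer in place

print(copied[:7])                # the copy still holds the old bytes
print(bytes(view[:7]))           # the view reflects the change
```

Because the view adds no second copy of the payload, the payload occupies memory only once, which is the property that lets zero-copy transfers keep the memory footprint down.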

Scalability

RDMA offers low-latency and high-throughput characteristics, making it well-suited for larger data environments where scalability is desirable. RDMA is an enabling technology for handling larger workloads at higher data transfer rates since it allows businesses to scale their infrastructure without losing performance.

Applications of RDMA in Networking and Storage

RDMA's capabilities make it well suited to a diverse range of use cases, especially within high-performance computing and data-heavy environments:

Cloud Computing and Data Centers

RDMA enables faster data transfers with lower latency, allowing for more efficient data centers in cloud computing. Cloud providers need to offer consistent performance to their customers, and this ability becomes increasingly critical. Many cloud providers are now beginning to embed RDMA into their infrastructure to respond to the need for high-speed storage and networking.

Database Management Systems

RDMA is significant for database management systems, and for any system that performs extensive read and write operations, as databases do. For example, RDMA-based databases can achieve lower query times and better performance, which is essential for transactional applications such as online retail and financial services.

Artificial Intelligence and Machine Learning

Model training for machine learning and AI algorithms requires large data sets, and RDMA can significantly speed up the data transfer process. Faster transfers, lower latency, and higher throughput ultimately mean that ML model predictions arrive sooner. This is critical for real-time machine-learning applications such as recommendation systems and placement.

High-Performance Computing (HPC)

RDMA has become prevalent in HPC domains where low-latency, high-throughput inter-node communication is crucial. By letting HPC applications transfer data between nodes faster, RDMA enables researchers and scientists to run simulations and models more quickly and efficiently.

Challenges and Limitations of RDMA

Despite its benefits, some issues with RDMA cannot be ignored:

- Hardware Requirements: RDMA needs special hardware such as RDMA-capable NICs, which increases initial set-up costs. However, RDMA over Converged Ethernet (RoCE) eases this by offering RDMA capability on existing Ethernet networks.

- Complex Implementation: Deploying and configuring RDMA can be more complex than conventional networking. In more extensive and complex environments, you will require proper expertise to ensure the system is optimized.

- Compatibility: Not all applications are RDMA-compatible, which can limit its usefulness in some environments.

Conclusion

Remote Direct Memory Access (RDMA) is revolutionizing networking and storage by allowing near-wire-speed transfers without the usual latencies associated with data transfer. It moves data with minimal CPU involvement and avoids network stack overheads, providing faster transfer rates with lower latency. That makes it an essential technology for high-performance computing, data centers, and cloud computing.

Although there are challenges related to RDMA, the benefits associated with efficiency, scalability, and reduced CPU load that can be achieved through utilizing RDMA present a whole new paradigm in networking and storage.

With the growing demand across industries for rapid data processing, RDMA will likely be widely adopted. Whether in cloud computing or in artificial intelligence applications, RDMA can speed up workloads across industries and will therefore help shape the future of data.
