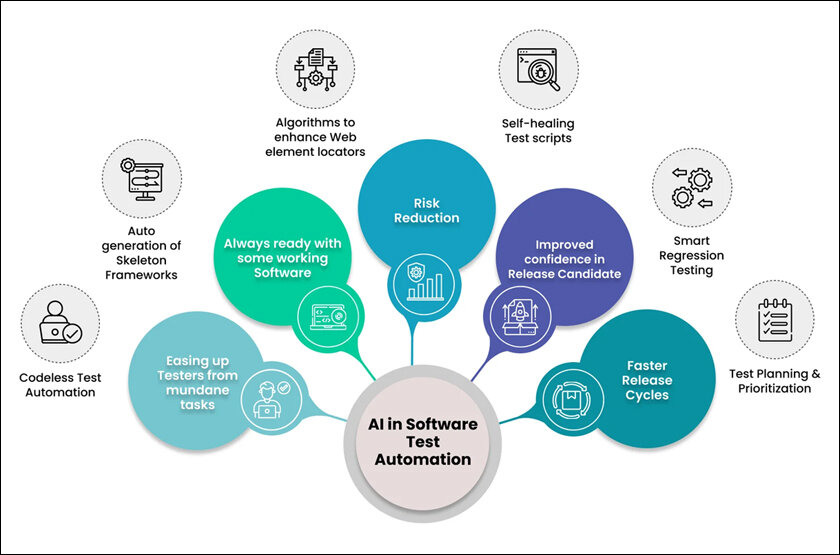

Generative AI is changing the way QA teams build, run, and scale testing pipelines. As organizations continue to accelerate their digital transformation, traditional QA approaches cannot keep up with rising expectations for faster, more reliable, continuous delivery, because those approaches tend to be manual, repetitive, and time-consuming.

Generative AI in software testing represents a strategic shift. Models can interpret requirements, generate test assets automatically, and support predictive decisions. Automated workflows and AI testing agents are already being put in place, and they are laying the foundation for the future of QA.

Paradigm Shift: The New Centrality of Generative AI in QA

Over the past decade, QA has moved from manual testing to automation, but automation still demands detailed scripting, ongoing maintenance, and domain knowledge. This is where generative AI creates value that was previously out of reach. Before going further, it is worth stressing that generative models do more than speed up automation. They turn testing into a continuous, contextual, and self-optimizing process.

Generative AI models can interpret natural-language requirements, understand UI structures, learn application behavior, and create tests that mimic real user flows. This removes much of the manual bottleneck in test design and substantially accelerates coverage. QA teams no longer have to trade speed for accuracy, even at scale.

Transforming End-to-End Test Case Design with Generative Intelligence

Traditionally, test case design required a thorough understanding of functionality and user flows, followed by a tedious scripting process. Generative systems shift much of that responsibility from people to machines. Testers become supervisors who direct the AI instead of writing every detail by hand.

Generative AI can analyze requirements, user stories, acceptance criteria, and application logs, and it can use them to create structured, executable test cases. The result is consistency, less duplication, and standardization across a large test suite. These models can also flag specification gaps that manual reviewers often overlook.

From a single requirement, a generative engine can create a variety of tests, such as:

- Functional test cases

- Boundary value and negative scenarios

- API-level validations

- Regression-ready automated scripts

- UI interaction flows derived from design files

This saves time early in development and frees testers to validate the most complex, exploratory, or business-critical aspects thoroughly.
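The boundary value and negative categories above can be made concrete with a requirement such as "quantity must be an integer from 1 to 100". The following minimal sketch shows the kind of cases an engine might derive from it. It uses classic rule-based boundary value analysis and is not a generative model. A model would first extract something like the hypothetical `FieldSpec` from prose, and `derive_cases` and `is_valid` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass
class FieldSpec:
    """Hypothetical structured form of a requirement for one integer input field."""
    name: str
    minimum: int
    maximum: int


def derive_cases(spec: FieldSpec) -> list[tuple[object, bool]]:
    """Return (input, expected_valid) pairs using classic boundary value analysis."""
    lo, hi = spec.minimum, spec.maximum
    cases: list[tuple[object, bool]] = [
        (lo, True), (lo + 1, True),        # lower boundary and just inside it
        ((lo + hi) // 2, True),            # a nominal value
        (hi - 1, True), (hi, True),        # just inside the upper boundary, then the boundary
        (lo - 1, False), (hi + 1, False),  # just outside each boundary
    ]
    # Negative scenarios: wrong types and a missing value.
    cases += [("abc", False), (None, False), (3.5, False)]
    return cases


def is_valid(spec: FieldSpec, value: object) -> bool:
    """Reference check for the requirement, standing in for the system under test."""
    return (
        isinstance(value, int)
        and not isinstance(value, bool)
        and spec.minimum <= value <= spec.maximum
    )


if __name__ == "__main__":
    quantity = FieldSpec("quantity", 1, 100)
    for value, expected in derive_cases(quantity):
        assert is_valid(quantity, value) == expected, value
        print(f"{quantity.name}={value!r} -> expect {'accept' if expected else 'reject'}")
```

Each generated pair carries its expected outcome, so the same list can drive functional, boundary, and negative checks without hand-written assertions per case.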

AI-Based Script Generation: Reinventing the Way You Approach Automation Development

Automation frameworks have traditionally demanded strong coding skills, and they need maintenance whenever the UI or application logic changes. As generative AI matures, writing and maintaining automation scripts becomes comparatively simple.

AI infers application behavior from DOM analysis, API patterns, logs, and user actions. Instead of writing elaborate code by hand, teams can generate scripts from simple descriptions. Better yet, a generative model can update outdated locators, repair broken steps, and regenerate affected scripts after application upgrades.

This sharply reduces the maintenance burden and helps automated tests keep pace with the product as it evolves. Test automation becomes adaptable as well as scalable, which is a core need for agile and DevOps teams.
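The locator repair described above can be illustrated with a heuristic sketch. This is not how any particular platform implements self-healing. It stores a fingerprint of the element a step originally targeted. When the primary `id` locator stops matching after a release, it falls back to the most similar element and updates the stored locator. The `Element` and `Locator` models, the scoring weights (0.3 / 0.4 / 0.3), and the 0.6 threshold are all assumptions. A generative model would replace the hand-tuned `similarity` function.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Element:
    """Simplified DOM node: a tag, its attributes, and visible text (hypothetical model)."""
    tag: str
    attrs: dict[str, str] = field(default_factory=dict)
    text: str = ""


@dataclass
class Locator:
    """Recorded fingerprint of the element a test step originally targeted."""
    element_id: str
    tag: str
    text: str
    attrs: dict[str, str] = field(default_factory=dict)


def similarity(loc: Locator, el: Element) -> float:
    """Score how closely an element matches the recorded fingerprint (0.0 to 1.0)."""
    score = 0.3 if el.tag == loc.tag else 0.0
    score += 0.4 if el.text.strip().lower() == loc.text.strip().lower() else 0.0
    shared = [k for k, v in loc.attrs.items() if el.attrs.get(k) == v]
    score += 0.3 * len(shared) / len(loc.attrs) if loc.attrs else 0.0
    return score


def find_with_healing(dom: list[Element], loc: Locator,
                      threshold: float = 0.6) -> tuple[Optional[Element], bool]:
    """Try the primary id locator; if it is broken, fall back to the best fingerprint match.

    Returns (element or None, healed_flag). On a successful heal the stored id is updated
    so later runs use the primary locator again.
    """
    for el in dom:
        if el.attrs.get("id") == loc.element_id:
            return el, False  # primary locator still works
    best = max(dom, key=lambda el: similarity(loc, el), default=None)
    if best is not None and similarity(loc, best) >= threshold:
        loc.element_id = best.attrs.get("id", loc.element_id)  # persist the repaired locator
        return best, True
    return None, False
```

If a release renames `submit-order` to `submit-order-v2` but keeps the button's tag, text, and classes, the step heals once and then resolves directly on the next run. Returning `None` below the threshold keeps the test failing loudly instead of clicking a wrong element.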

Autonomous Suite Optimization For Improving Regression Testing

Regression testing is extremely valuable, but it becomes overwhelming as test suites grow dramatically over time. Typical challenges include redundant scripts, overlapping cases, long execution cycles, and high maintenance. Generative AI adds intelligence by analyzing the suite as a whole and determining which tests are actually needed.

AI can classify tests by user behavior, functionality, and failure history to determine which cases need to run in a given cycle. The result is a more focused, optimized regression suite.

Machine learning models can also identify the highest-risk areas by monitoring execution patterns and performance data. QA teams can then practice risk-based testing without running the entire suite, covering the riskiest areas with far less effort.
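Risk-based selection of this kind can be sketched by scoring each test on two signals named above: its historical failure rate and how much it overlaps with modules changed in the current build. The sketch then greedily fills a time budget. The equal 0.5 weights, the rule that never-run tests count as fully risky, and the `SuiteEntry` record are assumptions. A learned model would normally replace the fixed formula.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    """One regression test with its (hypothetical) execution history."""
    name: str
    modules: set[str]   # application modules the test exercises
    runs: int           # historical executions
    failures: int       # historical failures
    duration_s: float   # average runtime in seconds


def risk_score(t: SuiteEntry, changed: set[str],
               w_fail: float = 0.5, w_change: float = 0.5) -> float:
    """Blend historical failure rate with overlap against modules changed in this build."""
    fail_rate = t.failures / t.runs if t.runs else 1.0  # never-run tests count as risky
    overlap = len(t.modules & changed) / len(t.modules) if t.modules else 0.0
    return w_fail * fail_rate + w_change * overlap


def select_tests(tests: list[SuiteEntry], changed: set[str], budget_s: float) -> list[str]:
    """Greedily keep the highest-risk tests that still fit in the time budget."""
    ranked = sorted(tests, key=lambda t: risk_score(t, changed), reverse=True)
    chosen: list[str] = []
    used = 0.0
    for t in ranked:
        if used + t.duration_s <= budget_s:
            chosen.append(t.name)
            used += t.duration_s
    return chosen


if __name__ == "__main__":
    suite = [
        SuiteEntry("checkout_happy_path", {"cart", "payment"}, 50, 10, 30.0),
        SuiteEntry("login_smoke", {"auth"}, 100, 1, 10.0),
        SuiteEntry("refund_flow", {"payment", "ledger"}, 20, 0, 40.0),
        SuiteEntry("new_coupon_test", {"cart"}, 0, 0, 20.0),
    ]
    print(select_tests(suite, changed={"payment"}, budget_s=70.0))
```

The greedy pass skips any test that would overrun the budget but keeps scanning, so a cheap low-risk test can still fill the remaining time.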

Improving Software Defect Prediction and Root Cause Analysis

One of the biggest benefits of generative AI is that it can examine application behavior, logs, metrics, and execution patterns to detect defects earlier in the lifecycle than conventional testing does. These models do more than detect failures. They predict which areas are likely to break.

AI-driven defect prediction helps teams:

- Identify modules prone to regression

- Forecast probable risk based on historical patterns of code changes

- Prioritize test execution by historical failure probability
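One way to sketch "modules prone to regression" is to mine commit history. For each module, the sketch estimates the share of changes touching it that were bug fixes, which is a common proxy for defect-proneness. It smooths that estimate with a Beta(1, 1) prior so that a module with only one or two commits is not rated 0% or 100% risky. The commit-history format and the prior are assumptions. Production defect-prediction models usually add more signals, such as churn size, authorship, and complexity.

```python
from collections import Counter

# Hypothetical commit history entry: (modules touched, whether the commit was a bug fix)
Commit = tuple[frozenset[str], bool]


def module_risk(history: list[Commit], alpha: float = 1.0, beta: float = 1.0) -> dict[str, float]:
    """Smoothed share of each module's changes that were bug fixes.

    (fixes + alpha) / (changes + alpha + beta) is the posterior mean under a
    Beta(alpha, beta) prior, which damps estimates for rarely changed modules.
    """
    changes: Counter[str] = Counter()
    fixes: Counter[str] = Counter()
    for modules, is_fix in history:
        for m in modules:
            changes[m] += 1
            if is_fix:
                fixes[m] += 1
    return {m: (fixes[m] + alpha) / (changes[m] + alpha + beta) for m in changes}


def regression_prone(history: list[Commit], top_n: int = 2) -> list[str]:
    """Return the top_n modules with the highest smoothed bug-fix share."""
    scores = module_risk(history)
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


if __name__ == "__main__":
    history = [
        (frozenset({"payment"}), True),
        (frozenset({"payment", "cart"}), True),
        (frozenset({"payment"}), False),
        (frozenset({"auth"}), False),
        (frozenset({"cart"}), False),
        (frozenset({"auth"}), False),
    ]
    print(module_risk(history))
    print(regression_prone(history))
```

The resulting scores can feed directly into test prioritization, running tests for the riskiest modules first.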

Beyond prediction, AI also speeds up root cause analysis. As Google explains, generative models analyze stack traces, logs, and execution flows to deliver an immediate snapshot of likely root causes. Instead of spending hours reproducing issues or deciphering logs, teams can receive insights in seconds.
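A common first step in this kind of triage is to collapse many failing tests into a few distinct failure signatures. The sketch below does this deterministically and is not a generative model. It reduces each Python-style traceback to its exception type plus the innermost function names, and it drops line numbers, which change between builds even when the bug does not. The traceback format, the regular expression, and the choice of three frames are assumptions.

```python
import re
from collections import defaultdict

# Matches frame lines such as:  File "app/pay.py", line 42, in charge
FRAME_RE = re.compile(r'File "[^"]+", line \d+, in (\S+)')


def signature(trace: str, frames: int = 3) -> str:
    """Reduce a Python-style traceback to 'ExceptionType @ f1 > f2 > f3'."""
    lines = trace.strip().splitlines()
    exc_type = lines[-1].split(":", 1)[0].strip() if lines else "Unknown"
    funcs = FRAME_RE.findall(trace)
    return exc_type + " @ " + " > ".join(funcs[-frames:])


def group_failures(failures: dict[str, str]) -> dict[str, list[str]]:
    """Map each failure signature to the names of the tests that share it."""
    groups: dict[str, list[str]] = defaultdict(list)
    for test_name, trace in failures.items():
        groups[signature(trace)].append(test_name)
    return dict(groups)
```

Ten failing tests that collapse into one signature point to a single likely root cause, which is the kind of snapshot an AI assistant would then explain in natural language.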

Innovating Exploratory Testing With Smart Guidance

Exploratory testing is a deeply human-centric activity, and generative AI enhances it rather than replacing it. Smart models can guide testers by proposing meaningful user journeys, unexplored paths, or under-tested areas of the application. This keeps exploratory effort targeted and productive.

AI can also capture testers' natural-language notes and automatically generate structured documentation, which turns exploratory sessions into reusable assets. This addresses the long-standing problem of exploratory testing being undocumented or subjective.

Use Generative Synthesis to Create More Intelligent Test Data

QA teams have long struggled to find relevant, safe, and diverse test data, which takes considerable time and effort. Generative AI makes producing synthetic data faster, smoother, and safer.

AI models can produce:

- Edge-case values

- Negative and boundary datasets

- High-volume synthetic records

- Domain-specific datasets for areas such as finance, healthcare, and e-commerce

Generative AI draws context from requirements and user patterns, so the data it produces can be diverse and also closely aligned with actual usage. This speeds up end-to-end validation, improves coverage, and supports privacy-conscious testing, because synthetic records do not expose real user data.
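The following sketch shows the general shape of such data: reproducible from a seed, a mix of realistic values and edge cases, and free of real user information. It is a seeded, rule-based stand-in for a generative model, which would infer field distributions from requirements and usage. The `Customer` fields, the edge-case values, and the 20% edge ratio are illustrative assumptions. Emails use the reserved `.test` domain so they can never reach a real mailbox.

```python
import random
import string
from dataclasses import dataclass

# Illustrative edge-case names: empty, whitespace, apostrophe, non-ASCII, very long.
EDGE_NAMES = ["", " ", "O'Brien", "Zoë", "名前", "a" * 256]
# Illustrative edge-case balances: zero, negative, and 32-bit integer limits.
EDGE_BALANCES = [0, -1, 2**31 - 1, -(2**31)]


@dataclass
class Customer:
    customer_id: str
    name: str
    email: str
    balance_cents: int


def synthetic_customers(n: int, seed: int = 42, edge_ratio: float = 0.2) -> list[Customer]:
    """Generate n reproducible synthetic customers; roughly edge_ratio of them use edge cases."""
    rng = random.Random(seed)  # private RNG so output depends only on the seed
    records: list[Customer] = []
    for i in range(n):
        if rng.random() < edge_ratio:
            name = rng.choice(EDGE_NAMES)
            balance = rng.choice(EDGE_BALANCES)
        else:
            length = rng.randint(3, 10)
            name = "".join(rng.choices(string.ascii_lowercase, k=length)).capitalize()
            balance = rng.randint(0, 1_000_000)
        records.append(Customer(f"C{i:05d}", name, f"user{i}@example.test", balance))
    return records


if __name__ == "__main__":
    for customer in synthetic_customers(5, seed=7):
        print(customer)
```

Because the generator is seeded, a failing test can be rerun against the exact same dataset. The high-volume case is simply a larger `n`.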

The Emergence of AI-Driven Test Execution and Autonomous QA

As generative models become more capable at reasoning, memory, and decision-making, test execution itself becomes more intelligent. Rather than simply executing scripts, AI models can assess conditions and adapt their behavior in real time.

This matters most in dynamic UIs, where elements move around or the same flow can produce different outcomes depending on conditions. AI-enabled tests do not fail outright when something shifts. They can adjust their path and keep executing.

AI testing agents extend this autonomy further. They do more than automate tests: they act as autonomous executors that learn how applications behave. TestMu AI (formerly LambdaTest) and similar platforms are paving the way for this transition. They provide AI-powered agents that navigate an application, understand its UI hierarchy, and perform smart validations. This bridges the gap between manual and automated testing and helps teams build at scale with confidence.

Seamless Integration Into CI/CD Pipelines

As organizations rely more heavily on continuous integration and delivery pipelines, generative AI is a natural fit. AI-powered testing workflows built into CI/CD add adaptive test suites that run on every code push.

Generative AI strengthens the alignment between the business and DevOps by enabling:

- Instant script generation while new features are developed

- Automatic adjustment of regression suites based on code changes

- Smarter, risk-based test prioritization

- Faster feedback loops for developers

Together, these make the development pipeline more resilient and responsive.

How Generative AI Improves a Cloud-Based Testing Ecosystem

Today, applications must be tested across thousands of browser, OS, and device combinations. Combined with cloud testing platforms, generative AI delivers scale, consistency, and speed together.

This is where platforms such as TestMu AI come into the picture.

KaneAI, TestMu AI's generative AI testing agent, transforms QA by using generative AI to create, execute, and maintain automated tests across web and mobile platforms. It turns high-level testing intent into executable tests, handles dynamic application changes through self-healing, and provides actionable insights on test results. KaneAI integrates into CI/CD pipelines to improve the reliability, speed, and coverage of automated testing.

Features:

- Intent‑driven test creation: Generates automated tests directly from natural language descriptions.

- Scenario enrichment: Produces edge cases, negative tests, and variations automatically.

- Full-stack validation: Covers UI, API, and database layers in unified workflows.

- Accessibility testing support: Detects accessibility issues and validates critical behaviors.

- Self-healing automation: Adapts to changes in locators, DOM structure, or dynamic elements.

- Execution monitoring: Provides real‑time reporting and test result analytics.

- Test modularization: Enables reusable, maintainable test components.

- CI/CD integration: Fits seamlessly into continuous integration and deployment pipelines.

- Performance validation: Identifies slow or flaky steps to optimize test reliability.

- Versioned test management: Tracks test changes and maintains historical execution data.

Richer Quality Insights and Continuous Improvement by Leveraging Data

Data-driven decision-making is the future of QA. Generative AI improves quality analytics by analyzing execution patterns, user behavior statistics, defect history, and other risk indicators. For teams, this means moving from static dashboards to dynamic insights that update with every build.

Generative intelligence helps QA teams predict quality trends, uncover process bottlenecks, and identify process improvements. This creates a continuous learning feedback loop. Quality is no longer a one-time goal but an evolving, measurable target.

Intelligent Automation for Security and Compliance Testing

Security testing often depends on deep expertise and extensive manual checking. Generative AI makes it more approachable by automating more of the work. By learning from historical incidents and public threat data, AI can evaluate logs, track trends, and proactively identify vulnerabilities. Typical uses include:

- Creating fuzzing inputs

- Identifying suspicious patterns

- Suggesting areas vulnerable to attacks

- Generating compliance-oriented scenarios

AI does not replace seasoned security auditors. It helps them speed up repetitive checks and raises the chance of catching vulnerabilities earlier.
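Creating fuzzing inputs can be sketched with a small mutation-based fuzzer. It applies random byte-level edits to seed inputs and feeds each result to a target. It treats `ValueError` as the target's expected way of rejecting bad input and records any other exception as a finding. A generative model would propose smarter, structure-aware inputs, but the feedback loop is the same. The `parse_header` target and its deliberate bug are hypothetical, and dedicated tools such as coverage-guided fuzzers go much further than this.

```python
import random
from typing import Callable


def mutate(seed: bytes, rng: random.Random, max_edits: int = 4) -> bytes:
    """Apply one to max_edits random byte-level edits (bit flip, insert, delete)."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, max_edits)):
        op = rng.choice(("flip", "insert", "delete"))
        if op == "insert" or not data:
            data.insert(rng.randint(0, len(data)), rng.randrange(256))
        elif op == "flip":
            i = rng.randrange(len(data))
            data[i] ^= 1 << rng.randrange(8)
        else:
            del data[rng.randrange(len(data))]
    return bytes(data)


def fuzz(target: Callable[[bytes], object], seeds: list[bytes],
         iterations: int = 1000, seed: int = 0) -> list[tuple[bytes, str]]:
    """Feed mutated inputs to target and record any that raise an unexpected exception.

    ValueError and its subclasses count as the target rejecting bad input gracefully.
    """
    rng = random.Random(seed)
    findings: list[tuple[bytes, str]] = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(seeds), rng)
        try:
            target(candidate)
        except ValueError:
            pass  # expected, graceful rejection
        except Exception as exc:  # anything else is worth triaging
            findings.append((candidate, type(exc).__name__))
    return findings


def parse_header(raw: bytes) -> tuple[str, str]:
    """Toy 'key=value' parser with a deliberate bug for the fuzzer to find."""
    # Invalid UTF-8 (UnicodeDecodeError) and a missing '=' both raise ValueError subclasses.
    key, value = raw.decode("utf-8").split("=", 1)
    if value[0] == " ":  # bug: IndexError when the value is empty, e.g. b"k="
        value = value[1:]
    return key, value


if __name__ == "__main__":
    for data, error in fuzz(parse_header, [b"k=v", b"name= alice"])[:5]:
        print(error, data)
```

Seeding the fuzzer's own random generator makes every finding reproducible, so a reported crash can be replayed and handed to an auditor for review.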

Getting QA Teams Ready for an AI-Augmented Future

With generative AI, tester roles don’t disappear; they are reinvented. QA professionals become AI supervisors who steer the AI, verify its results, and make sure products behave as the business intends. To adapt, testers need to build AI fluency and analytical skills, along with a deeper understanding of how products behave.

Organizations that see potential in GenAI should invest in training, experimentation, and iterative adoption. They should build confidence gradually: start with selected AI use cases, such as automated test case creation or self-healing scripts, and then scale up.

Final Thoughts: The Intelligent QA of Tomorrow Is Today

The adoption of generative AI in software testing represents a fundamental shift in how enterprises achieve software quality. The transformation is likely to be deep and irreversible. It spans automated test creation, agent-driven execution, predictive analytics, leaner regression cycles, and AI-supervised delivery pipelines.

As platforms like TestMu AI broaden and democratize AI-enabled testing, organizations can scale intelligently, reduce their dependence on manual processes, and run autonomous, continuous QA. Human toil alone will not define the software quality of the future. A close partnership between testers and intelligent systems will, ushering in faster releases, sharper precision, and greater digital stability.

To read more content like this, explore The Brand Hopper
